Paper review: a meta-analysis of physician-led pre-hospital care

In which we find a couple of issues in a viral article, and highlight some challenges in pre-hospital research

This post touches on two of my favourite topics: statistical analysis and emergency pre-hospital care. It was inspired by one of my favourite regular listens, The World’s Okayest Medic Podcast, which did an episode on the paper and raised some thoughtful questions about the study and its conclusions. As a data scientist with an interest in the topic, I wanted to have a look myself.

Disclaimer: I am not a paramedic or medical professional. I am a volunteer EMR and a full-time data scientist.

The article in question is Benefits of targeted deployment of physician-led interprofessional pre-hospital teams on the care of critically Ill and injured patients: a systematic review and meta-analysis.

The paper follows a pretty standard meta-analysis workflow, with all the complications that entails, and there is not much in the way of fancy statistical analysis. I only got around to digging into the first outcome, mortality within 30 days. As with any meta-analysis, the paper itself is a top layer, and a lot of the important detail lies in which studies were included and why (or why not).

TLDR: In looking at the first outcome I find a couple of issues with the included studies that call into question the rigour of this article. More time needed to look at the second outcome and the paper as a whole.

First impressions

The first thing that jumps out to me is the choice of countries included. It is a bit of a strange mix of a handful of European countries, as well as Australia and Japan. There is a bias towards English-speaking countries as they only selected papers published in English. From personal experience living in both English-speaking and continental European countries, I know that pre-hospital care structures can vary significantly. Many parts of Europe have a two-tiered system:

BLS ambulances staffed by EMTs.

ALS ambulances staffed by either a nurse, or a physician with postgraduate emergency care specialisation.

By contrast, the UK, Ireland, and Australia use a model more similar to the U.S., with ALS ambulances staffed by Paramedics or Advanced Paramedics.

This leads to an important question: What exactly are we comparing here?

The paper describes the comparator as:

The care provided in the comparator "non-physician" arms of the studies was generally of a high level of care provided by highly educated clinicians. In 22 of the 23 studies, non-physicians provided advanced life-support (ALS) in the comparator arms. Most countries staffed non-physician ambulances with emergency medical technicians (EMT) and ALS providers, most referred to as "Paramedics" or an equivalent translation.

If the standard ALS ambulance in France (for example) is already physician-led, and paramedics don’t exist in this system, what are we comparing them to? Digging into the Yeguiayan et al., 2011 paper shows that the comparator is non-SAMU, fire-based BLS care. In France, ‘standard care’ is really a combination of BLS units and, often, physician-staffed ALS units (this is captured in the Appendix to the original meta-analysis paper as well). Notably, this study has one of the largest effect sizes favouring physician-led teams, which raises the question of whether it should have been included. The comparison is quite different from those in the other papers, in that it compares BLS+ALS to BLS alone.

The two papers with the largest weight in the mortality analysis, both by Endo et al., come from Japan. A quick look at the Japanese EMS system shows that while it does have paramedic-led standard care, the culture paramedics operate in is quite different from that of Europe, with a heavy dependence on remote medical control before more advanced interventions are performed.

For the Finnish study (Pakkanen et al., 2019), it is noted that paramedics have a limited scope with regard to the medications used for intubation. Notably, the physicians in this study are specifically anaesthetists, and much attention is given to their high rate of successful intubation, suggesting perhaps that they were introduced to address a specific need within this system.

All of this suggests that the definition of the comparator arm here is rather loose and heterogeneous, which will raise some questions about the conclusions.

Included studies

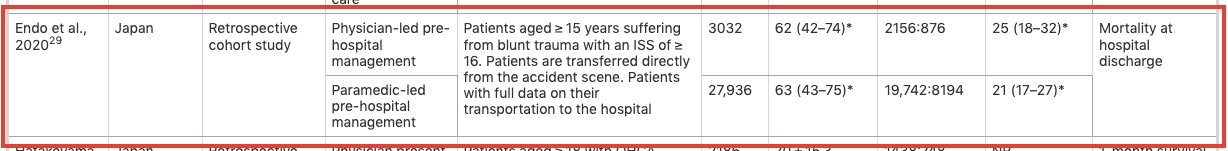

This part is problematic. When reviewing the list of included studies, I noticed that the two studies from Endo et al. have similar patient counts, similar descriptions of the study population, and very similar outcomes. I wondered if there was some overlap in the study populations used for the two studies.

Here are the results for the mortality outcome, highlighting the Endo studies.

Digging into the references shows that the 2020 paper has no journal reference so this may be a pre-print.

Following the link confirms this, and shows that the paper went through major revisions before finally being published in 2021. Following the published link brings us to… the other paper, published Jan 06 2021. D’oh!

This means that the Endo 2020 study is essentially a duplicate of the published Endo 2021 study, with some modifications to the study population, leading to minor differences in the outcome. Including both in the meta-analysis is problematic because it artificially inflates the weight of that study. Therefore the Endo 2020 paper should not be included.
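To put a rough number on that inflation, here is a minimal sketch using the standard errors from the data table at the end of this post. It uses plain inverse-variance weights (1/SE²), which is an assumption on my part: a random-effects model adds the between-study variance to every study and so flattens the weights, meaning these shares overstate what the forest plot would show. The direction of the effect is the same either way.

```r
# Standard errors of the log odds ratios, as extracted from the forest plot
se <- c(
  "De Jongh et al., 2012" = 0.2606,
  "Endo et al., 2020"     = 0.059,
  "Endo et al., 2021"     = 0.0615,
  "Garner et al., 2015"   = 0.2369,
  "Hepple et al., 2019"   = 0.3812,
  "Lyons et al., 2021"    = 0.2192,
  "Maddock et al., 2020"  = 0.2254,
  "Pakkanen et al., 2019" = 0.229,
  "Yeguiayan et al., 2011" = 0.2763
)

# Inverse-variance weights: precise (small SE) studies dominate the pool
w <- 1 / se^2

# Share of total weight carried by the Endo cohort when both entries are included
endo <- c("Endo et al., 2020", "Endo et al., 2021")
share_both <- sum(w[endo]) / sum(w)

# Share carried by the published Endo paper once the preprint is dropped
w_dedup <- w[names(w) != "Endo et al., 2020"]
share_dedup <- unname(w_dedup["Endo et al., 2021"] / sum(w_dedup))

cat(sprintf("Endo share with both entries: %.1f%%\n", 100 * share_both))
cat(sprintf("Endo share without preprint:  %.1f%%\n", 100 * share_dedup))
```

Even after removing the preprint, the published Endo paper remains by far the most precise study, so it would still drive much of the pooled estimate.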

An alternative analysis

To explore the impact of these issues, I re-ran the analysis removing both the French paper and the Endo preprint. I extracted the data from the graph using ChatGPT (something it’s very good at!).

First I tried to replicate the original analysis in R. The methods section only specifies that a random-effects model was used, so I assumed restricted maximum likelihood (REML), the default in the metafor package, to estimate the between-study variance. My results were slightly different, with a slightly wider confidence interval:

Original study: OR = 0.80 (0.68, 0.91)

My replication: OR = 0.79 (0.67, 0.92)

I attached the code and data at the end of the blog in case you want to try it yourself.
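For readers who want to see what a random-effects pool actually computes, here is a base-R sketch. Note that it deliberately swaps in the DerSimonian-Laird estimator for the between-study variance rather than the REML I used, because it has a closed form and fits in a few lines. The function name `dl_pool` is my own, not part of any package. Some review software uses DerSimonian-Laird by default, so a different estimator is one possible reason a replication would not match exactly, but that is a guess and not something the paper states.

```r
# Random-effects pooling with the DerSimonian-Laird tau^2 estimator (illustrative helper)
dl_pool <- function(yi, sei) {
  vi <- sei^2
  wi <- 1 / vi                              # fixed-effect (inverse-variance) weights
  k  <- length(yi)
  mu_fe <- sum(wi * yi) / sum(wi)           # fixed-effect pooled log odds ratio
  Q  <- sum(wi * (yi - mu_fe)^2)            # Cochran's Q heterogeneity statistic
  C  <- sum(wi) - sum(wi^2) / sum(wi)
  tau2 <- max(0, (Q - (k - 1)) / C)         # between-study variance, truncated at 0
  wi_re <- 1 / (vi + tau2)                  # random-effects weights
  mu <- sum(wi_re * yi) / sum(wi_re)
  se <- sqrt(1 / sum(wi_re))
  z  <- qnorm(0.975)
  c(or = exp(mu), ci_lb = exp(mu - z * se), ci_ub = exp(mu + z * se), tau2 = tau2)
}

# Same nine studies, in the same order as the data table at the end of the post
logOR <- c(0, -0.139, -0.1278, 0.0488, 0.131, -0.462, -0.5798, -0.637, -0.5978)
SE    <- c(0.2606, 0.059, 0.0615, 0.2369, 0.3812, 0.2192, 0.2254, 0.229, 0.2763)

fit_dl <- dl_pool(logOR, SE)
print(round(fit_dl, 3))
```

The key design point is in `wi_re`: adding `tau2` to every study's variance pulls the weights closer together, which is why small studies matter more under random effects than under a fixed-effect model.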

Now I re-run the analysis, dropping the Endo pre-print and the French study. We see that there is still a statistically significant result in favour of the physician-led teams, but with the confidence interval closer to crossing 1 and more uncertainty around the updated outcome.

It’s notable that the studies showing physician benefit all have significant effects while there is more uncertainty in those showing no benefit. Given the inclusion of the duplicate paper and the questionable decision to include the French paper, I’m not sure how much confidence we can have in the filtering process for which articles were included.
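The pattern described above can be checked directly from the extracted data by turning each log odds ratio and standard error into an odds ratio with a 95% confidence interval. This is a sketch using a normal approximation; the inputs are approximate values read off the forest plot, so a borderline interval (Endo et al., 2021 sits very close to 1) could flip with slightly different rounding.

```r
# Study-level data as extracted from the forest plot (see the data table at the end)
studies <- data.frame(
  name = c("De Jongh et al., 2012", "Endo et al., 2020", "Endo et al., 2021",
           "Garner et al., 2015", "Hepple et al., 2019", "Lyons et al., 2021",
           "Maddock et al., 2020", "Pakkanen et al., 2019", "Yeguiayan et al., 2011"),
  logOR = c(0, -0.139, -0.1278, 0.0488, 0.131, -0.462, -0.5798, -0.637, -0.5978),
  SE    = c(0.2606, 0.059, 0.0615, 0.2369, 0.3812, 0.2192, 0.2254, 0.229, 0.2763)
)

# Wald-type 95% interval on the log scale, back-transformed to odds ratios
z <- qnorm(0.975)
studies$OR    <- exp(studies$logOR)
studies$ci_lb <- exp(studies$logOR - z * studies$SE)
studies$ci_ub <- exp(studies$logOR + z * studies$SE)

# A study is individually significant when its interval excludes OR = 1
studies$excludes_1 <- studies$ci_ub < 1 | studies$ci_lb > 1

print(studies[, c("name", "OR", "ci_lb", "ci_ub", "excludes_1")], digits = 2)
```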

Inconclusions: Challenges of meta analysis

Meta-analysis is the highest standard of evidence according to Cochrane, as in theory it combines multiple noisy studies to average over the randomness we expect to observe in any single study effect. However, it is not a magic tool that automatically delivers the correct answer just by pooling together a bunch of studies.

I am hesitant to either fully trust or dismiss the results of this analysis without exploring three factors further:

Which studies are included—and, equally important, which are excluded.

Whether the ‘standard’ care comparator is truly comparable across different healthcare systems and scopes of practice. This is a question best answered by practitioners.

Which outcomes are examined—I only analysed 30-day mortality, but survival outcomes in this study showed a stronger effect in favour of physician-led teams.

I don’t want to rag on the authors too much; publishing papers is a pain in the ass when working a full-time job. All of the authors appear to be either doctors or medical students. That said, I believe there are enough issues here to warrant a skeptical review and a deeper dive into more well-defined comparisons such as clinical scope, actual interventions applied, or level of training.

A more rigorous study is needed to ensure that valid comparisons are made between physician-led and paramedic-led care. Even if this study’s effect is real, the key question remains: What do we do with this information? How feasible and cost-effective is it to deploy more doctors in pre-hospital settings?

Bonus: R code and data

Data in CSV format

name,logOR,SE
"De Jongh et al., 2012",0,0.2606
"Endo et al., 2020",-0.139,0.059
"Endo et al., 2021",-0.1278,0.0615
"Garner et al., 2015",0.0488,0.2369
"Hepple et al., 2019",0.131,0.3812
"Lyons et al., 2021",-0.462,0.2192
"Maddock et al., 2020",-0.5798,0.2254
"Pakkanen et al., 2019",-0.637,0.229
"Yeguiayan et al., 2011",-0.5978,0.2763

# R code: install metafor once if it is not already available
install.packages("metafor")

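library(metafor)

# Load the extracted log odds ratios and standard errors (adjust the path as needed)
df <- read.csv("Downloads/meta_data.csv")

# res1: random-effects model on all nine studies (REML is metafor's default, stated explicitly here)
res1 <- rma(yi = logOR, sei = SE, data = df, method = "REML")

# res2: same model without row 2 (Endo et al., 2020 preprint) and row 9 (Yeguiayan et al., 2011)
df2 <- df[-c(2, 9), ]
res2 <- rma(yi = logOR, sei = SE, data = df2, method = "REML")

print(res1)
print(res2)

# Pooled estimates, back-transformed from log odds ratios to odds ratios
predict(res1, transf = exp, digits = 2)
predict(res2, transf = exp, digits = 2)

# Forest plot for the full replication, annotated with heterogeneity statistics
forest(res1, atransf = exp, header = "Study", showweights = TRUE, slab = df$name)
text(-2.5, 11, pos = 4, cex = 0.75, bquote(paste(
  "RE Model (Q = ", .(fmtx(res1$QE, digits = 2)),
  ", df = ", .(res1$k - res1$p), ", ",
  .(fmtp2(res1$QEp)), "; ",
  I^2, " = ", .(fmtx(res1$I2, digits = 1)), "%, ",
  tau^2, " = ", .(fmtx(res1$tau2, digits = 2)), ")")))

# Forest plot for the reduced analysis (seven studies)
forest(res2, atransf = exp, header = "Study", showweights = TRUE, slab = df2$name)
text(-2.5, 9, pos = 4, cex = 0.75, bquote(paste(
  "RE Model (Q = ", .(fmtx(res2$QE, digits = 2)),
  ", df = ", .(res2$k - res2$p), ", ",
  .(fmtp2(res2$QEp)), "; ",
  I^2, " = ", .(fmtx(res2$I2, digits = 1)), "%, ",
  tau^2, " = ", .(fmtx(res2$tau2, digits = 2)), ")")))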